#2: AI anxiety

Hey, this thing is becoming smarter than me!

This is the first time I have felt any anxiety about a technological development. Usually, I greet new technologies with excitement (“Oh wow, it’s a pretty cool library I can play around with”) or just a basic “Hmm, this is really weird hype, but I’m pretty sure nothing substantial will come out of it.”

But the developments of the past few years, particularly these past few months, have been hitting me on a different level. It is the first time I have felt unsure about what I want to do in life, and whether I will be rendered meaningless within my lifetime.

The technology I’m talking about is, of course, AI, and it is different from other technologies. Other technologies usually come in the form of a tool, but AI comes in the form of a surprisingly intelligent agent. Day by day, it is becoming more and more human. It just doesn’t consume energy the way we do, probably doesn’t need as much rest or a personal life, and definitely can’t unionize (yet).

Let’s take a look at how ChatGPT paraphrases the three paragraphs above:

For the first time, I'm experiencing a flicker of anxiety about a development in technology. Usually, I'd greet them with enthusiasm, exclaiming, "Oh wow, what a cool library to tinker with!" or with a shrug, "Hmm, this feels like peculiar hype, but I doubt anything substantial will come of it."

However, the advancements made in recent years, particularly over the past few months, have struck me in an entirely different way. It's the first time I've felt uncertain about my path in life and wondered if I might become obsolete within my lifetime.

The technology in question, of course, is AI, which sets itself apart from other innovations. While other technologies arrive as tools, AI emerges as a surprisingly intelligent agent. Day by day, they become increasingly human-like. They don't consume energy the way we do, likely need less rest or personal time, and, for now, can't unionize (though never say never!).

I have to admit that AI just made my below-average writing wittier and more compelling. Its version is more human, more enticing, and more grammatical.

Experts have long said that creative work would be among the last jobs to be replaced, but it turns out they were dead wrong. Writers, painters, and 3D artists are becoming increasingly redundant these days (even the logo of this publication was drawn by Stable Diffusion).

AI could do virtually any white-collar job on earth better than the average person, and that is before counting future improvements. Simply feeding more training data into a model seems to improve its capabilities significantly, and some researchers have observed what look like emergent capabilities.

Given that, what will happen to our need for meaning and self-worth? Viktor Frankl argued that humans need meaning to survive, and most humans find meaning in their work and in helping other people. If AI can do most of our work better, and more people and companies prefer to be helped by AI, humans will become mere background characters amid an ever-improving species of AI systems taking on more and more of the work on earth.

And we’re not even talking about the existential threat of a God-like AI yet, only about an AI that can do many things better than the average human.

I will probably carry this AI anxiety with me for a long time, given that I’m currently dedicating a big chunk of my life to empowering underserved workers, many of whom, unsurprisingly, will soon be replaced by AI.

Top Gems

Last week, dozens of researchers and notable people (including Yoshua Bengio and Elon Musk, among others) signed an open letter calling for a 6-month pause on all advanced AI development. And only a couple of hours later, Eliezer Yudkowsky published an article in TIME arguing that we should shut all AI development down. It was a pretty wild and entertaining week (although deep inside I’m scared).

A veteran AI scientist concluded that the way we have done AI research over the past few decades is ineffective, and that we need to admit we should let machines search and learn by themselves rather than imbue them with our knowledge. AI researchers have often tried to build knowledge into their agents. This helped in the short term, but the big breakthroughs eventually came from scaling up the computation that AI uses to search and learn.

Surprisingly, Matt Welsh (a veteran computer scientist, portrayed in The Social Network as the professor who taught Mark Zuckerberg in an operating systems class) took a very progressive view, one that arguably runs against his own lifetime of researching and teaching programming since the ’80s. In his ACM article, he argued that classical programming, yes, the programming we know, is dead.

He boldly predicts that “The engineers of the future will, in a few keystrokes, fire up an instance of a four-quintillion-parameter model that already encodes the full extent of human knowledge (and then some), ready to be given any task required of the machine. The bulk of the intellectual work of getting the machine to do what one wants will be about coming up with the right examples, the right training data, and the right ways to evaluate the training process.”

Given how I am interacting with the GPT-4 API and ChatGPT, I suspect his prediction may come true very soon.

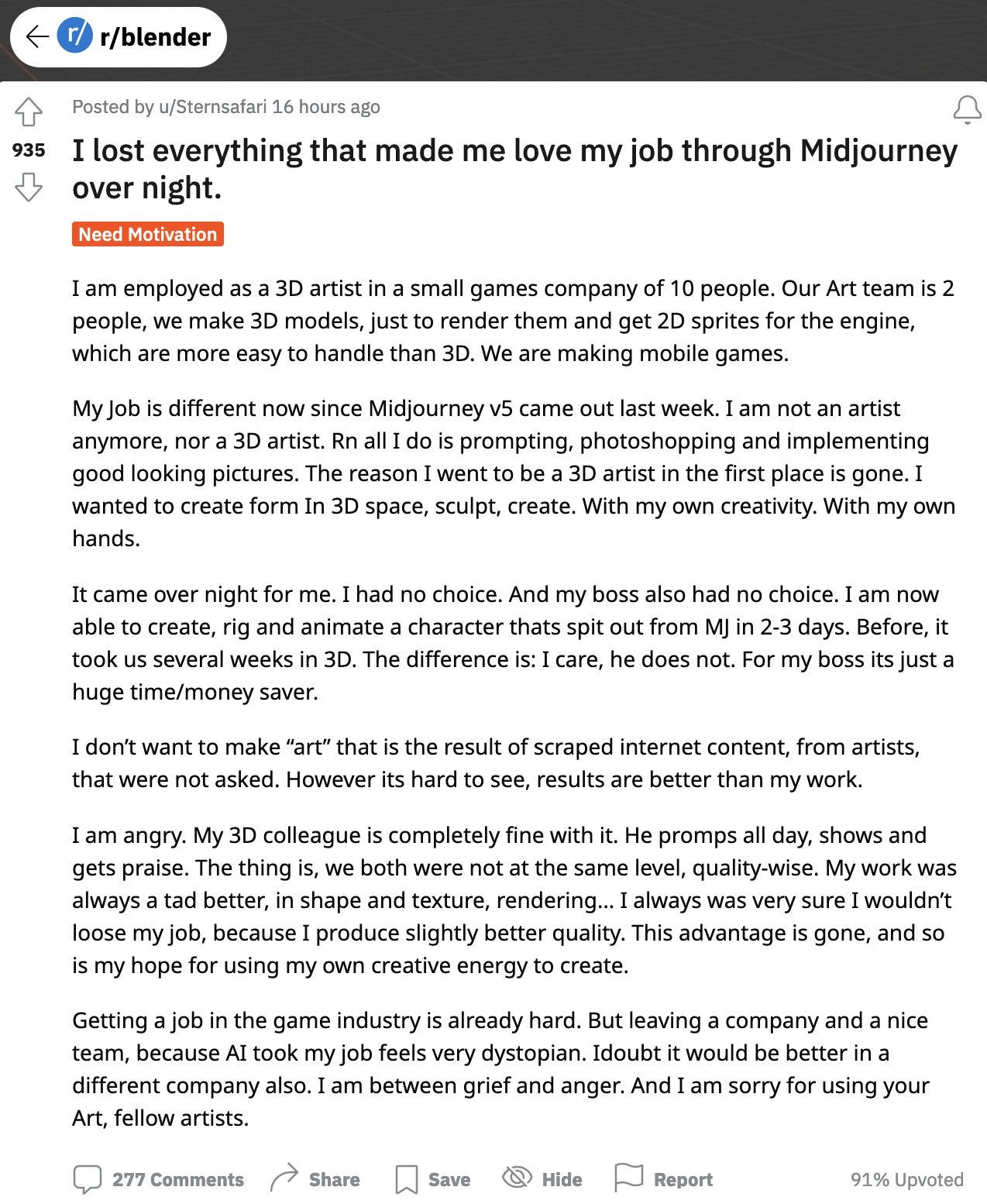

It is a sad story: every breakthrough innovation creates new jobs and destroys others. If we are not affected, we may only count those losses in percentages or statistics. We need to discuss this challenge with more empathy. Imagine if we were the ones whose jobs were lost to AI: we would find it harder to find work, and we would struggle to put food on our family’s dinner table. See the heated discussion on Hacker News.

This post may be a bit dark, but I believe things will get even darker in the next few years, as we are unprepared for whatever comes next. Still, historically speaking (sample size = ?), humans should be able to adapt to whatever innovations come their way 💡

Enjoy the long weekend, my friends!