#3: Bye tool AIs, say hi to autonomous agent AIs

AI can now create, prioritize, and complete tasks for us. So what do we do now?

Hey everyone! In the last issue, I shared my thoughts on being human in the age of ever-smarter AI systems. Every time I meet people in person, the conversation eventually turns to AI, no matter who I talk to. I think this is an interesting development: with information flowing ever faster through online channels like Twitter and TikTok, cutting-edge technologies can reach the masses in record time. You probably know that more than 100 million people (over 1% of humans on earth) used ChatGPT within only two months of its launch. We technologists no longer have a monopoly on cutting-edge tech; it's a first-mover advantage at best.

AI as agents

Most of the AIs on the market right now can still be called tools, just like mobile phones or the internet. We mostly control what they do through the input we provide and the interactions we have through their user interfaces. When using apps, we control them through their UI; when using AIs such as ChatGPT, we control them through the prompts we give. We can chain prompts and results into a sequence, but we do it manually.
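To make "chaining manually" concrete, here's a minimal sketch in Python. Note that `fake_llm` is a made-up stand-in I'm using for illustration, not a real API: it just returns a canned reply so the example runs on its own. The point is that a human decides every next prompt.

```python
def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for a chat model call; returns a canned reply."""
    return f"[reply to: {prompt}]"

# Step 1: the human writes the first prompt.
outline = fake_llm("Outline a blog post about AI agents")

# Step 2: the human reads the result and decides what to ask next,
# pasting the previous output into the new prompt by hand.
draft = fake_llm(f"Write the intro for this outline: {outline}")

print(draft)
```

Every arrow between steps here is a person. That's the part agents try to take over.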

Last week there were some cool new projects that push AI from being just a tool toward being an agent. You provide a high-level goal, and the AI figures out a multi-step, continuous way to run and optimize toward that goal. Given that AI can now interact with the whole internet, and possibly with the outside world, the possibilities are almost endless. And as AI becomes more multimodal (meaning it can handle not just text but also images, sounds, and videos), we will have smart, interactive agents, very similar to Tony Stark's JARVIS.

Tool: an instrument used to carry out very specific tasks.

Agent: an entity that is able to create tasks, prioritize tasks, complete tasks, and go in an autonomous loop until its goal is achieved.
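Here's how I picture that definition as a loop, in a rough Python sketch. Everything in it is an assumption on my part: `run_agent` and the `toy_*` helpers are hypothetical names, and the toy functions stand in for what would be LLM calls in a real agent. It's not how any of the projects below are actually implemented.

```python
from collections import deque
from typing import Callable, Deque, List, Tuple

def run_agent(
    goal: str,
    plan: Callable[[str], List[str]],
    execute: Callable[[str], Tuple[str, List[str]]],
    prioritize: Callable[[List[str]], List[str]],
    max_steps: int = 10,
) -> List[Tuple[str, str]]:
    """Loop: pick a task, complete it, add follow-up tasks, re-prioritize."""
    tasks: Deque[str] = deque(plan(goal))      # create the initial tasks
    history: List[Tuple[str, str]] = []
    for _ in range(max_steps):                 # hard cap so the loop cannot run forever
        if not tasks:                          # nothing left: treat the goal as reached
            break
        task = tasks.popleft()
        result, new_tasks = execute(task)      # complete the task
        history.append((task, result))
        tasks = deque(prioritize(list(tasks) + new_tasks))
    return history

# Toy stand-ins (hypothetical); a real agent would ask an LLM here.
def toy_plan(goal: str) -> List[str]:
    return [f"research {goal}", f"summarize {goal}"]

def toy_execute(task: str) -> Tuple[str, List[str]]:
    follow_ups = [f"verify {task}"] if task.startswith("research") else []
    return f"done: {task}", follow_ups

def toy_prioritize(tasks: List[str]) -> List[str]:
    return sorted(tasks)                       # alphabetical order as a placeholder

history = run_agent("Flutter", toy_plan, toy_execute, toy_prioritize)
for task, result in history:
    print(task, "->", result)
```

The `max_steps` cap is the design choice I'd keep in any real version: an autonomous loop needs a stop condition that doesn't depend on the agent's own judgment.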

What's out there

Here are the popular AI agent projects:

AutoGPT: created by Toran Richards, this experimental open-source project attempts to make GPT-4 autonomous. It can connect to the internet, use apps, use long-term & short-term memory, and more.

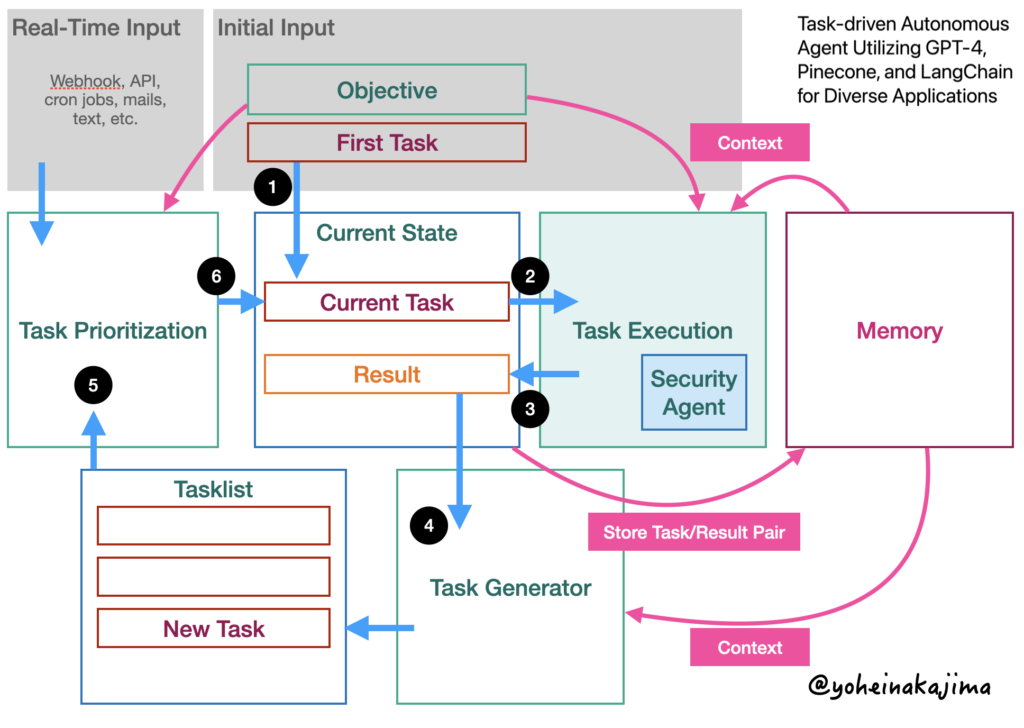

BabyAGI: created by Yohei Nakajima, this one is pretty elegant at only ~200 lines of code. It can complete tasks, generate new tasks based on results, and prioritize tasks in real time.

JARVIS (hey Tony!) is pretty similar to the two above, but it’s built by Microsoft and HuggingFace, so I suspect it will have stronger integration with the ChatGPT & Azure cloud ecosystem.

All three are open source and are meant to enable autonomous workflows powered by LLMs. AutoGPT skyrocketed on GitHub last week, with almost 100 thousand stars already, making it the fastest-growing repository there. Some forks have made it into the mainstream news, mostly in exaggerated stories about agents trying to break free from their sandbox or end humanity.

I also played around with LangChain, a framework for building programmatic and agent-based AIs. LangChain provides a simple abstraction for working with LLMs and makes it easy to construct complex sequences on top of them. It's surprisingly easy to use: you just import the library in Python.

If you are curious to try AI agents but have no time to set up the tooling locally yourself, you can try AgentGPT, which is available online. It can do complex sequences and runs autonomously for a while, but it's pretty limited in what it can accomplish since it's isolated from the outside world.

In the screenshot above, I'm running AgentGPT with the goal of learning Flutter so that I can be a strong mobile engineer at established tech companies. After a couple of minutes of running, it gave me a learning plan, a list of courses to take, and exercises I can do to practice my Flutter skills. I tried a few more goals, and each time it gave me a pretty solid plan to achieve them. Imagine if we gave an AI agent free access to our computer and all of the apps on it. Going further, we could give it access to all our social media profiles and credit card information. Imagine what we could accomplish (and how fast our money would run out if the agent went off course).

From doing a task to achieving a goal

Using ChatGPT now feels like when I first learned to program. When we engineers started learning to program, we started by building for the command line. We read from standard input (the keyboard), our program processes the input, and the output shows up on standard output (the monitor). REPL shells, like those in interpreted languages, work the same way. It's pretty much analogous to the ChatGPT interface: you prompt something, the AI thinks, then you get the result.
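If you haven't written one in a while, this is roughly the read-process-print shape I mean, as a tiny Python sketch. `read_eval_print` is a name I made up, and I pass in a list of lines instead of real keyboard input so the example is self-contained.

```python
from typing import Callable, Iterable, List

def read_eval_print(lines: Iterable[str], evaluate: Callable[[str], str]) -> List[str]:
    """Read each input line, process it, and print the output, like a tiny REPL."""
    outputs: List[str] = []
    for line in lines:
        text = line.strip()
        if text == "quit":        # a simple way to end the session
            break
        out = evaluate(text)      # the "thinking" step
        print(out)
        outputs.append(out)
    return outputs

# sys.stdin could be passed as `lines`; a list keeps this runnable anywhere.
outputs = read_eval_print(["hello", "agents", "quit", "ignored"], str.upper)
```

Swap `str.upper` for a call to a chat model and you have the basic shape of a chat interface: one input, one output, then wait for the human again.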

In programming, if you want to build more complex and more useful software, you need to compose multiple functions, and even multiple services, that interact with each other. However, we still need to code the exact sequence of actions that the program will run. Building complex software like this is similar to building an AI agent. The difference is that in complex software, the tasks are explicitly programmed down to the deepest level (even if it calls other libraries, they are still code, and if it calls binaries, they are still machine code). In an AI agent, the sequence of tasks is generated autonomously by the AI, and it can run without being micromanaged by us humans.

I'm super interested in what the abstractions will look like in LLM chain programming. Traditional data structures are deterministic, so we can probably build something novel for the non-deterministic nature of prompts & results. It would probably be similar to handling unknown results or waiting for an API call to finish using the Maybe & Future constructs in functional programming, except that LLMs can have an infinite number of possible future results.
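To show what I mean by borrowing Maybe & Future, here's a small Python sketch using only the standard library. `Optional[int]` plays the role of Maybe and `concurrent.futures` provides the Future. `flaky_llm` is a hypothetical stub that picks a random canned reply to mimic non-determinism; it's my own illustration, not an existing abstraction for LLMs.

```python
import random
from concurrent.futures import ThreadPoolExecutor
from typing import List, Optional

def flaky_llm(prompt: str, rng: random.Random) -> str:
    """Hypothetical model stub: same prompt, possibly a different reply each call."""
    return rng.choice(["42", "forty-two", "I am not sure"])

def parse_int(text: str) -> Optional[int]:
    """Maybe-style result: an int when the reply is usable, otherwise None."""
    return int(text) if text.isdigit() else None

rng = random.Random(0)
with ThreadPoolExecutor(max_workers=1) as pool:
    # Future: a handle to a reply that has not arrived yet.
    futures = [pool.submit(flaky_llm, "What is 6 * 7?", rng) for _ in range(5)]
    replies: List[str] = [f.result() for f in futures]

parsed = [parse_int(r) for r in replies]          # some entries may be None
usable = [p for p in parsed if p is not None]     # keep only the usable ones
print(replies, usable)
```

The Future handles "not here yet" and the Maybe handles "here, but not usable". What neither captures is that the reply itself is drawn from an open-ended space, which is the part I think needs a new construct.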

What's next

I believe we are entering a new era of trying to fuse explicit procedure-making by humans with non-deterministic, autonomous decision-making by AI. Overall, I'm pretty excited about what's coming next.

Current AI systems, especially LLMs, still have a lot of limitations, such as being very inefficient to train, having shallow reasoning capability, and tending to make stuff up. Most notably, AI doesn’t have a good understanding of the world just yet; Prof LeCun even said that house cats are better at it than LLMs. However, if we are talking about an agent that's supposed to live and work in the digital world, I believe it doesn't need an understanding much beyond that.

Our mind is a stochastic decision-making & generation engine, and so is AI (at least in the case of LLMs). With this in mind, I believe more and more work in the digital world, however complex, can be automated in some way. Instead of hiring a human worker, we might just spawn an AI agent to do the job. In a few months, AI agents may be doing work that millions of people currently do. In a few years, we might find ourselves working for these AI agents.

Given how many real people’s jobs are going to be impacted, I think we need to think seriously about re-training impacted workers. We also need to figure out how to educate our younger graduates on how to do well in this seemingly unpredictable future.

Top finds

1. "Computer is like a bicycle for our minds", by Steve Jobs

In this quick video, Steve argues that humans are ingenious not because of our raw capability but because we can build tools that augment our capability. No matter how impressive technology becomes, I still subscribe to Steve Jobs’ idea that technology should augment our human capability to pursue grander goals that we otherwise couldn’t pursue.

2. Note to Haystack VC portfolio on AI, by Semil Shah

Semil wrote a very useful guide for his VC’s portfolio companies on harnessing AI before they get disrupted. I find the case studies and references insightful for my current thinking on incorporating AI in my company.

3. SudoLang: A Powerful Pseudocode Programming Language

We all know it: prompt engineering is more frustrating than debugging. In debugging, we can take a look at the underlying source code, but in prompt engineering, we are dealing with a black box. SudoLang can provide a more understandable and interpretable structure for communicating with GPT-4.

I drafted this newsletter issue a couple of days ago, but yesterday Matt wrote this AI agent explainer that’s pretty beginner-friendly. It solidified my understanding, and if you come from a non-technical background, you might want to give it a read.

That’s it for this week, folks! I will be going back to my hometown this weekend to spend the Eid al-Fitr holiday with my family, so I might be late in replying to your comments and messages. I hope you are well and healthy wherever you are!

If you find this piece useful and want your friends or team members to know firsthand about these bleeding-edge autonomous agents, feel free to forward it!